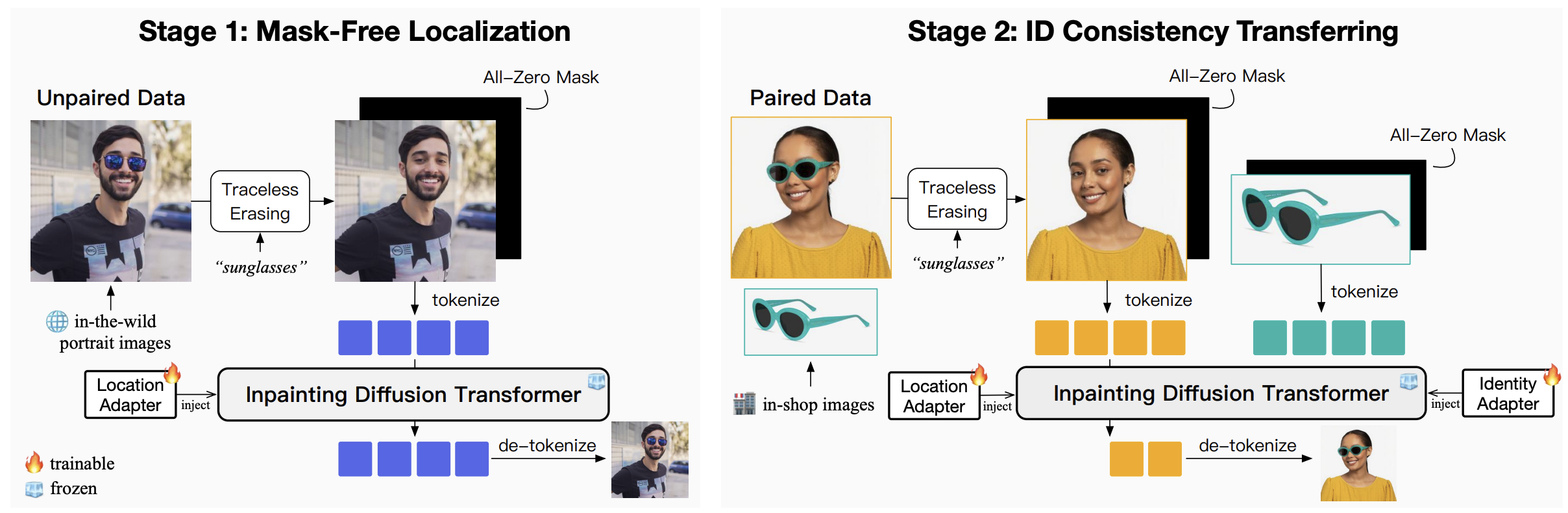

Virtual Try-On (VTON) is a practical and widely applied task, for which most existing works focus on clothes. This paper presents OmniTry, a unified framework that extends VTON beyond garments to encompass any wearable object, e.g., jewelry and accessories, in a mask-free setting for more practical application. When extending to various types of objects, data curation is challenging because paired images, i.e., the object image and the corresponding try-on result, are hard to obtain. To tackle this problem, we propose a two-stage pipeline. In the first stage, we leverage large-scale unpaired images, i.e., portraits with any wearable items, to train the model for mask-free localization. Specifically, we repurpose the inpainting model to automatically draw objects in suitable positions given an empty mask. In the second stage, the model is further fine-tuned with paired images to transfer the consistency of object appearance. We observe that the model after the first stage converges quickly even with few paired samples. OmniTry is evaluated on a comprehensive benchmark consisting of 12 common classes of wearable objects, with both in-shop and in-the-wild images. Experimental results suggest that OmniTry performs better on both object localization and ID-preservation than existing methods. The code, model weights, and evaluation benchmark of OmniTry will be made publicly available.

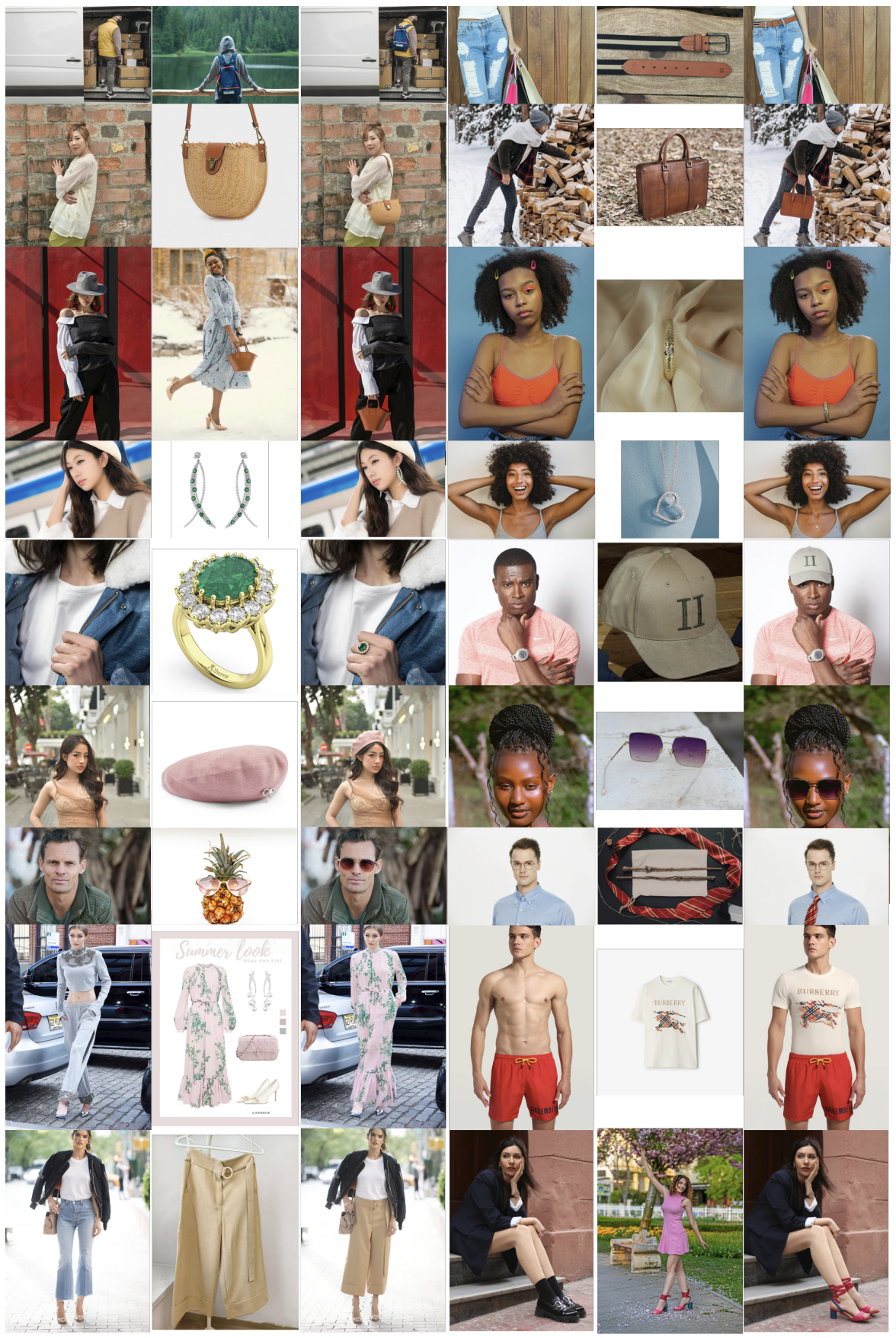

Evaluation results on the OmniTry-Bench containing 12 main classes of wearable objects.

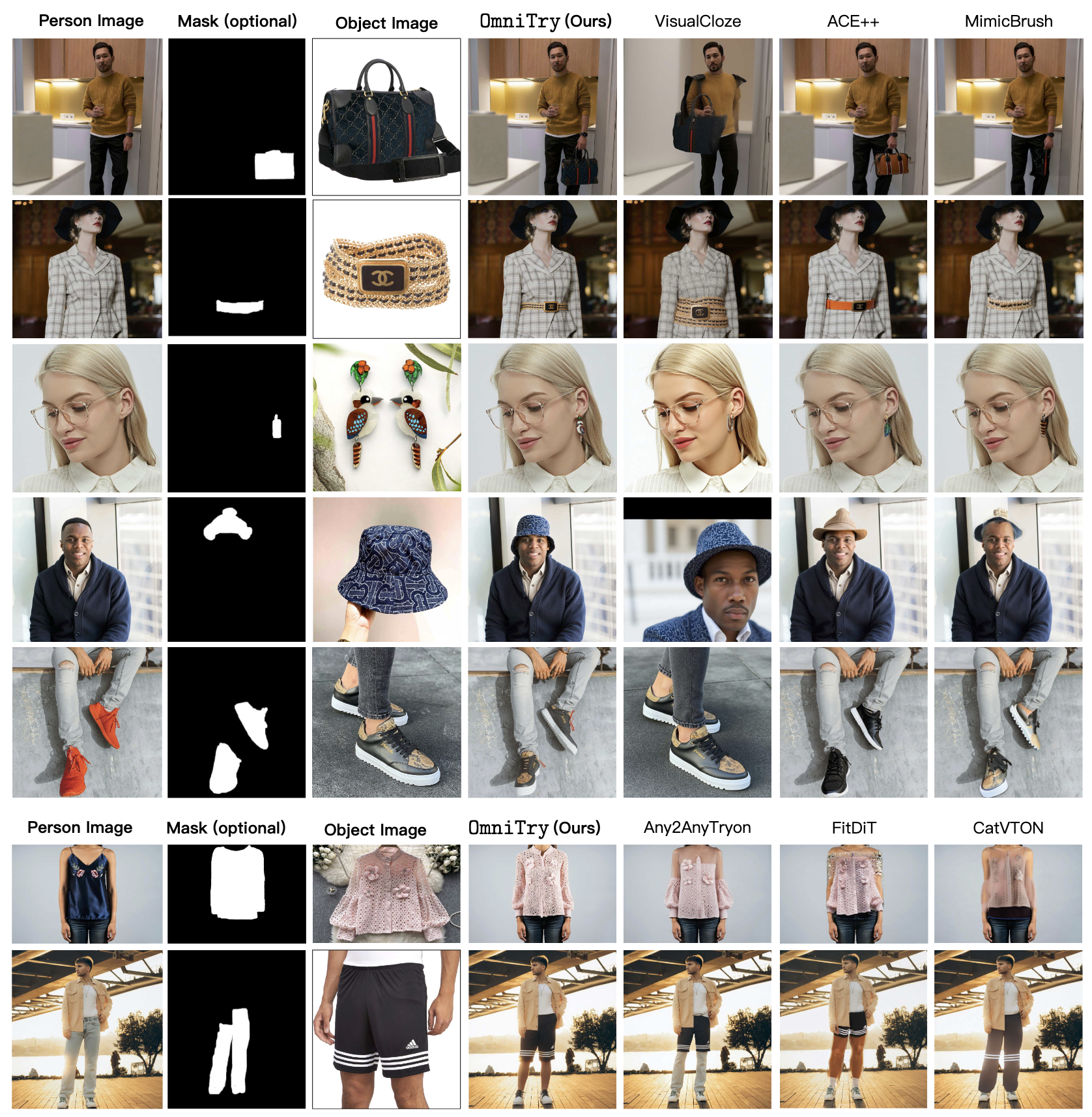

Comparison with existing methods.

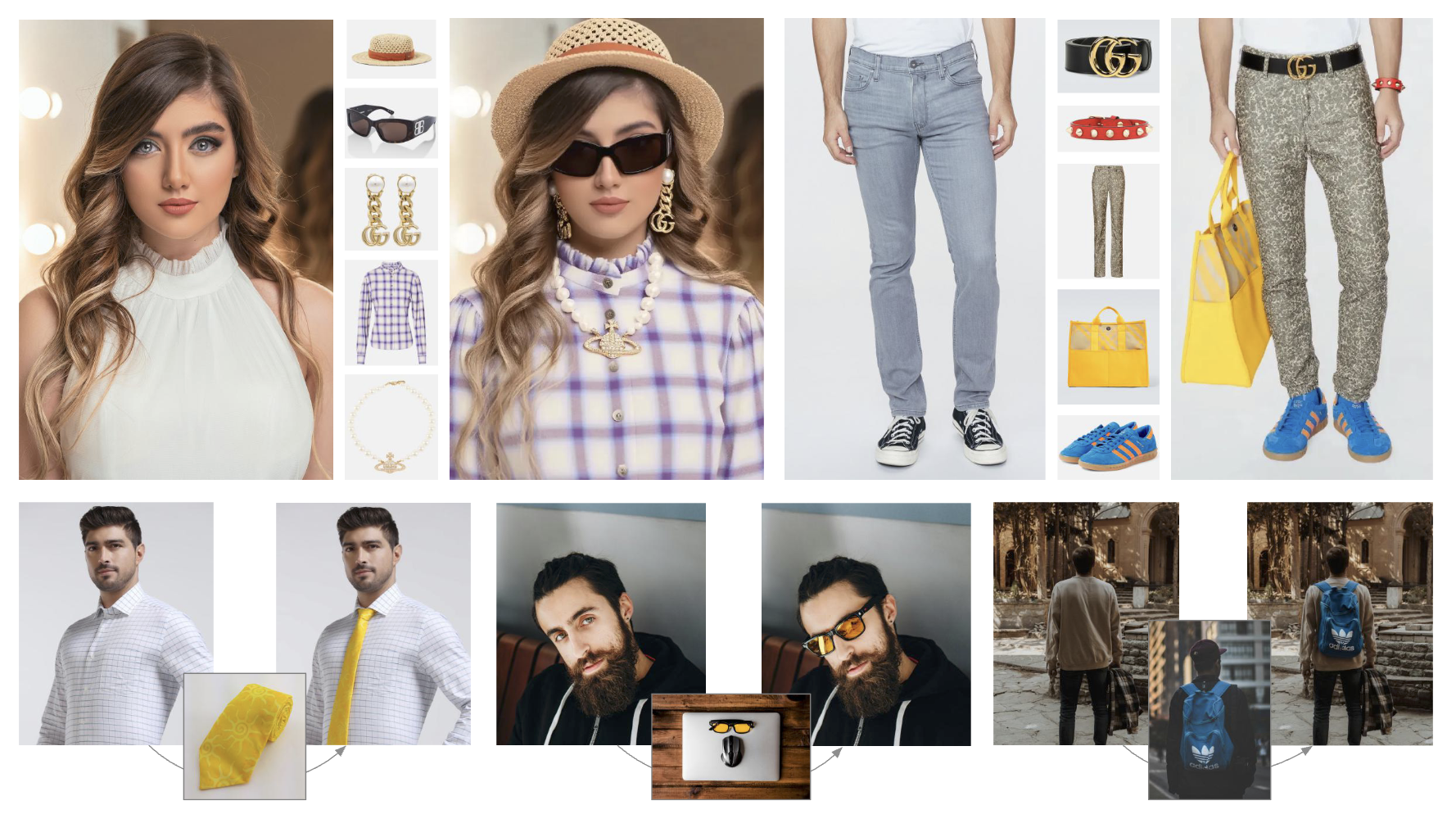

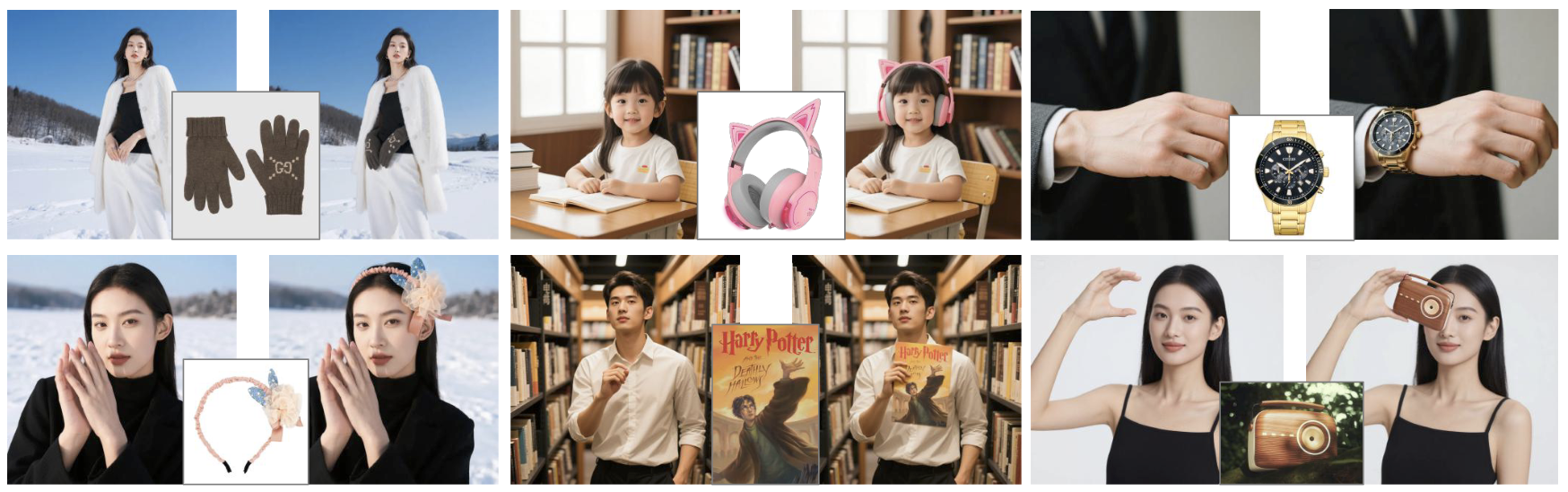

Extending OmniTry to uncommon classes.

The two-stage training pipeline of OmniTry. The first stage is built on in-the-wild portrait images and learns to add wearable objects onto the person in a mask-free manner. The second stage introduces in-shop paired images and aims to control the consistency of object appearance.
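To make the "empty mask" idea of the first stage concrete, the toy sketch below shows how the conditioning of a typical inpainting model degenerates when no region is marked for repainting: the masked image equals the full input, so the model has to decide on its own where to draw the object. This is an illustration under stated assumptions, not the OmniTry implementation. The function names, the single-channel nested-list image, and the convention that a mask value of 1 means "repaint" are all hypothetical.

```python
from typing import Dict, List

# Toy single-channel image as nested lists, values in [0, 1] (assumption).
Image = List[List[float]]


def empty_mask(height: int, width: int) -> Image:
    """All-zero mask: no pixel is marked for repainting (assumed: 1 = repaint)."""
    return [[0.0] * width for _ in range(height)]


def inpainting_condition(image: Image, mask: Image) -> Dict[str, Image]:
    """Build the conditioning a typical inpainting model receives.

    Pixels where mask == 1 are blanked out; the model is expected to fill them.
    """
    masked = [
        [pixel * (1.0 - m) for pixel, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]
    return {"masked_image": masked, "mask": mask}


person = [[0.2, 0.4], [0.6, 0.8]]

# Conventional inpainting: the user marks the top-left pixel for repainting.
boxed = inpainting_condition(person, [[1.0, 0.0], [0.0, 0.0]])

# Mask-free setting: an empty mask leaves the whole person image visible.
cond = inpainting_condition(person, empty_mask(2, 2))
```

With the empty mask, `cond["masked_image"]` is identical to `person`, whereas the conventional call blanks out the marked pixel. The localization itself would have to be learned from data, which is the role the text assigns to the first training stage.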

@article{feng2025omnitry,
  title={OmniTry: Virtual Try-On Anything without Masks},
  author={Feng, Yutong and Zhang, Linlin and Cao, Hengyuan and Chen, Yiming and Feng, Xiaoduan and Cao, Jian and Wu, Yuxiong and Wang, Bin},
  journal={arXiv preprint arXiv:2508.13632},
  year={2025}
}